Earlier this year, I completed a four-month-long survey of 12th-grade students in and around the city of Ranchi in Jharkhand, a state in eastern India. My dissertation research measures the demand for different types of post-secondary education and identifies students who may be constrained in their ability to borrow money to enroll in such education. I also experimentally evaluate how information about the measured returns to different types of higher education in the region affects students' borrowing behavior. My sample of approximately 1,500 is drawn from students across nine public intermediate colleges (institutions parallel to high schools) that span urban and rural regions. I study student enrollment in technical/professional, general (academic) and vocational tracks in a setting where overall post-secondary attainment is low but growing and where access, fueled in part by demand, is socio-economically uneven.

In no particular order, here are four big learnings from my time in the field:

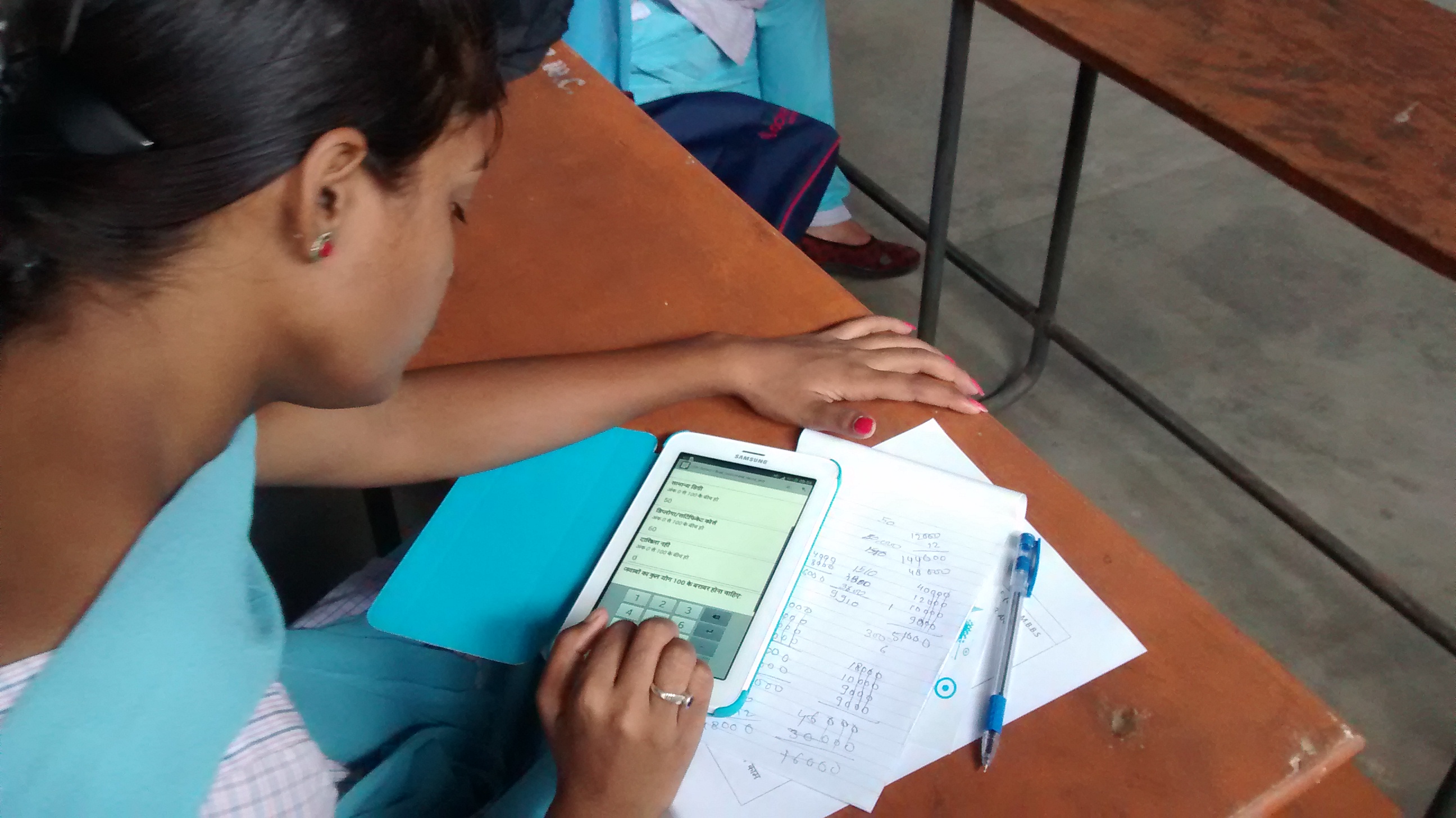

1. Leverage technology when you can

Going in, I was advised from several quarters to ditch mobile data collection in favor of more conventional paper surveys. Some of the cons of mobile-based surveys, I was reminded, include: managing and charging a large number of tablet devices, tablets being mishandled by user-respondents, the number of tablets being a binding constraint on the overall sample size, and the lack of digital literacy among respondents. I am glad, however, that I stuck to my guns on the use of tablets during data collection while simultaneously addressing the associated logistical pitfalls. The payoff was tremendous in terms of clean and reliable data. By using a digital survey I was able to build in constraints on responses (for instance, requiring stated choice probabilities to add up to 100) and avoid missing data by not allowing respondents to skip questions. I could also easily match students across the two survey rounds and retrieve select round-one responses for each student as they deliberated on their round-two responses. On top of that, the tablets automatically collected a bunch of cool metadata, like the start and end times of each survey.
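To make the idea of built-in response constraints concrete, here is a minimal Python sketch of the kind of check a digital form can enforce before accepting an answer. It is not my actual ODK form: the track names in `TRACKS` and the function `validate_response` are hypothetical and chosen purely for illustration, and it assumes respondents enter whole-number percentages.

```python
# Hypothetical option labels; the real survey's choice set may differ.
TRACKS = ("technical", "general", "vocational")


def validate_response(response):
    """Return a list of problems with one response; an empty list means it passes.

    `response` maps each track name to a stated choice probability (0-100).
    """
    errors = []
    for track in TRACKS:
        value = response.get(track)
        if value is None:
            # Mirrors "not allowing respondents to skip questions".
            errors.append(f"missing answer for '{track}'")
        elif not 0 <= value <= 100:
            errors.append(f"'{track}' must be between 0 and 100")
    # Only check the total once every individual answer is present and in range.
    if not errors and sum(response[t] for t in TRACKS) != 100:
        errors.append("probabilities must add up to 100")
    return errors


if __name__ == "__main__":
    print(validate_response({"technical": 50, "general": 30, "vocational": 20}))
    print(validate_response({"technical": 50, "general": 30, "vocational": 10}))
    print(validate_response({"technical": 50, "general": 30}))
```

A form that refuses to advance until a check like this returns no errors is what keeps incomplete or inconsistent answers out of the data in the first place, rather than leaving them to be cleaned up afterwards.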

By going digital I also learnt how to use OpenDataKit (ODK), an open-source (free!) and easy-to-use software suite for building surveys and for collecting and aggregating data. With no professional input and minimal coding, I was able to get my survey up and running in just a few days. Moreover, it was extremely easy to incorporate revisions into the survey instrument (my survey changed a lot after the first pilot) and to look at my data as soon as it was collected in order to catch glitches.

Other highly recommended survey technologies include audio recorders to monitor enumerator effort (hat tip to Julia Berazneva for the recommendation) and pocket-sized pico projectors, which I used to display my survey instrument and to assist enumerators in explaining the survey questions to the respondents as they filled out the survey. Another extremely useful device was a portable power bank (like a UPS to which electronic devices can be connected) that lasts at least 4-5 hours and can reduce dependence on unreliable (mostly unavailable in my case) local electricity. I bought mine in India.

2. Invest in a good team

Spend time recruiting, interviewing and training a good survey team. Previous survey experience is less important than qualities such as professional integrity, enthusiasm, strong interpersonal and communication skills, a willingness to learn and some appreciation for research. No one on my team had worked on a large-scale survey, but they were all young (under 28), most were recent graduates of the university and all worked hard to ensure the timely completion of the project. Celebrating survey milestones and doing non-work-related activities together was also a big and continuous part of team building!

3. There’s always plan B

Things almost never go according to plan in the field. Fortunately, dealing with panic attacks soon becomes second nature as you start thinking on your feet to come up with creative workarounds. Our initial strategy was to randomly sample from lists of enrolled students, but we soon realized that student attendance in most months was less than 5% of the enrolled students. We responded by mobilizing students and spreading the word in private tutoring classes where these students took additional lessons, in student hostels, on important school days when attendance was high and once even via a local newspaper. These adaptations meant that our sampling frame and survey timeline changed a lot, but this was better than no data at all.

Other instances when we deviated from plan A, but survived, included a complete revamping of our survey schedule when state elections hit Jharkhand and public colleges were converted to polling booths. We also had an enumerator drop out midway, but fortunately we had trained more enumerators than we needed at the beginning and were able to regroup quickly.

4. Navigate the inevitable political landscape

In remote areas, surveying respondents at institutions may be easier and cheaper than surveying respondents in their homes. On the flip side, you may face bureaucratic hurdles when it comes to working at institutions. Getting all permissions up front is necessary to ensure that your survey isn’t terminated midway. It is also important to work with skilled staff who understand the realities of how the institutions in question work. As you and your team make multiple visits to the concerned authorities and put in long waiting hours, don’t get frustrated by thinking of this as time wasted. This is, indeed, time invested!

Great post! Very helpful for other researchers as you have documented everything.

Hello! Thanks for this post.

I wonder if there are agencies or companies that can help in conducting surveys, possibly in digital form, with high-school students. We are working on a new kind of concept validation/testing approach and would like to know if there is a need for such an approach. How do we reach about 5,000 students spread across at least 3-4 Indian states? This data would give us more confidence in our project.

Thanks in advance,

Nitin

Hi Nitin,

You might want to check out Outline India and SocialCops. Those are the two companies/agencies that I know of that provide data collection services, though I’m sure there are many more out there.

Good luck,

Tanvi